I hear these requests all the time: “What are the best agile metrics?”, “How can we measure an agile team?” and “I know we can’t just measure agile... but what should be on an ‘organizational agility checklist’?”

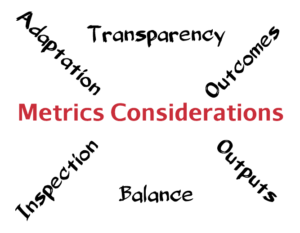

There are so many places you can go with these questions, and there are even companies selling ways to measure agile organizations and agile teams. When someone asks me about agility checklists or agile metrics, I tend to start with a few core themes. I use these themes to have conversations with the people who want the measurements and the people who will be measured (before anyone starts using them).

Basic agile stuff: Include whatever basic agile stuff is important to you (experiments, improving via retros, delivering features, etc.). Since this is the theme people focus on the most, you can find plenty more on it if you don’t already have ideas. A lot of basic agile checklist items and measurements tend to focus on outputs, which is okay, but see the section below on outcomes and outputs.

Transparency: I always include some items that focus on transparency. I find that transparency is a great counterbalance to best practices (don’t limit yourself with best practices); when done right, the two go well together. Items like “The team is transparent with leadership about the impact of sprint interruptions” and “The team is transparent with each other about internal team challenges” are good starting points for discussing these ideas and figuring out how you will know whether they are actually happening.

Balance: Balance is key. I want items for the leaders as well, for example “Leaders’ reactions to changes help to increase trust with the team” (which can be a fun one to discuss!). I want items that are clear, yet have some variability in them (is that even possible?). I like to make them simple enough that people can agree that doing the opposite would be a bad thing. Balance is critical for checklists and metrics because it lets you look at multiple focal points that impact an outcome.

Outcomes and outputs: I do see value in both outcomes and outputs. An outcome is an end goal that drives your organization, whereas an output is something that can (but does not always) drive outcomes. An output might be lines of code per day (blah) or features delivered in a sprint (I don’t dislike this one as long as it is balanced with others). These outputs might be useful to measure, but the outcomes are the results of those outputs. We want to look at the outcomes to know whether the outputs are achieving the results we need as an organization. This is another reason you need balance.

For example, say I look at the output of features delivered in a sprint as a potential factor in achieving the business-driving outcome of 5% new customer growth. Many factors may help us achieve this outcome, and the output of delivering features in a sprint (specific to that outcome, of course) is just one of them.

The features in the sprint may have been focused on a new marketing campaign or on making it easier to get referrals, but that does not mean they achieved the outcome of 5% new customer growth. Even if you increased your referrals by 300% (another output), that does not mean you will have 5% new customer growth. Another output that might affect that outcome is the percentage of customers followed up with after the initial sale. We could measure and track that, but as with the increase in referrals, following up with 100% of customers does NOT mean that we will hit our outcome of 5% new customer growth. (Note that even this outcome still needs more work, because we don’t just want new customers; we want new customers that we retain for as long as or longer than current customers, assuming we are happy with our current customer retention. Just realize you can always peel the onion more.)

Realize that it can be perfectly fine for a team to be working on features that are focused on outputs! Trying to force every small feature to map to an outcome generally does not work. Just keep looking at your outcomes and make sure the items being delivered are moving them over time.

Inspection and Adaptation: The last element I’ll mention is inspection and adaptation. I like to look for ways to add agile checklist items or agile metrics that are focused on improving the checklist or metrics themselves. This might sound a bit funny at first, but inspect and adapt! You know there will be change, so build that into whatever you are creating from the start. Adding elements aimed at improving the agile metrics over time (adding, changing, deleting items) acknowledges from the beginning that there is no perfect answer.

In summary, look at the problems agile checklists or agile metrics might create and the concerns you might have with them, then find ways to hack the checklist against those problems.

Thanks Jake – great discussion on outcomes and outputs. I like OKRs in this area, since you still show the outputs the team is producing while looking at whether they move the needle on the expected outcomes. The focus on both gives the team credit for the work while trying to move away from feature factories.

Kevin, I was wondering if someone would bring up OKRs! OKRs are interesting, since I see people doing them in different ways. If done well, they do exactly that. But I’m also finding people just using “OKR” as a label for whatever they have been doing all along (that was not working). Have you run across that yet? It sounds like they have been working well for you?